The AEC (Architecture, Engineering and Construction) sector is still in the infancy of its much-anticipated move from Industry 3.0 to Industry 4.0. This move implies the introduction and development, in the near future, of technologies that will facilitate intelligent and real-time interactions between people, information, and representations across actual and virtual worlds, and which have the potential to truly revolutionise how we design, build and operate in our sector. This project proposal focuses on the combined potential of real-time video image processing and eXtended reality (XR) applications for the AEC sector.

XR technologies are expected to grow into a $95 billion global market by 2025. Although the strongest demand for these immersive technologies currently comes from industries in the creative economy, such as video entertainment and gaming, their wider applications have huge potential to improve efficiency and productivity in the AECO (Architecture, Engineering, Construction and Operations) industry. Construction alone is one of the fastest-growing industries in Australia and one of the largest contributors to the Australian economy, generating 8.7% of total gross value added (GVA). The potential of XR technology to revolutionise the AECO sector is already widely accepted. However, current implementations of XR in our sector are quite limited.

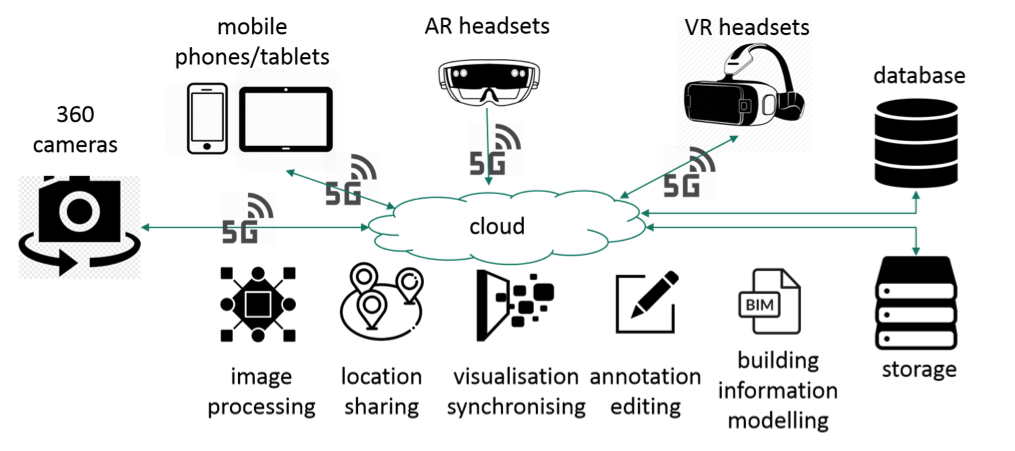

Current work is motivated by the opportunity to visualise architectural models and construction sites and to improve design and construction operations. While VR (Virtual Reality) technologies, coupled with other modelling and simulation technologies (e.g. BIM), are mainly used to support design decision making, AR (Augmented Reality) is anticipated to have a bigger impact, especially on construction operations, by reducing re-work, improving safety, lowering labour costs and helping to meet deadlines. Current research focuses on a number of key areas, such as design and construction education and training, construction safety management, support for construction assembly procedures, and sustainability analysis. The majority of current work focuses on immersive visualisations of static information and models. In addition, limited network bandwidth and high latency remain significant problems for XR experiences. We envisage that the increased bandwidth and speed and the lower latency that 5G technology will soon provide will create unprecedented opportunities for XR technologies to offer novel solutions that improve remote and co-located creative and interactive collaboration of project teams with multimodal information input.

Image processing technology, on the other hand, has already been adopted in the AEC sector for structural component recognition, personnel and equipment detection, action recognition and hazard avoidance. Nevertheless, such technology is mostly used on desktops or laptops at locations remote from the construction site, with footage from conventional (flat) video cameras. Little research has been done on processing images captured by 360 video cameras and projecting the resulting location-tagged information onto AR and VR headsets in real time.
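To illustrate what tagging information from a 360 image with a location can involve, the following is a minimal sketch rather than part of the proposed framework. It assumes the 360 camera delivers equirectangular frames and that the viewing direction uses a y-up, z-forward axis convention; the function name `pixel_to_direction` and these conventions are hypothetical choices made only for illustration.

```python
import math


def pixel_to_direction(u, v, width, height):
    """Map a pixel (u, v) in an equirectangular frame to a unit direction.

    Assumed conventions (illustrative only):
      - u runs left to right and covers longitude -pi..pi
      - v runs top to bottom and covers latitude +pi/2..-pi/2
      - x points right, y points up, z points forward
    """
    lon = (u / width - 0.5) * 2.0 * math.pi   # horizontal angle
    lat = (0.5 - v / height) * math.pi        # vertical angle
    x = math.cos(lat) * math.sin(lon)
    y = math.sin(lat)
    z = math.cos(lat) * math.cos(lon)
    return (x, y, z)


# A detection at the centre of a 1920x960 frame lies straight ahead (+z),
# while one three quarters of the way across lies to the right (+x).
ahead = pixel_to_direction(960, 480, 1920, 960)
right = pixel_to_direction(1440, 480, 1920, 960)
```

A headset application could, under the same assumptions, place a marker along the returned direction from the camera position, so that a detection made in the 360 frame appears at the corresponding place in the wearer's view.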

Technology alone, however, cannot revolutionise existing practices. What will make a real impact are the new modes of interaction that these technologies facilitate, which will in turn introduce new value propositions and lead to the desired change and improvements. The future potential of XR technology will be unlocked when users can actually interact with dynamic information and models in real time, across remotely located project teams.

The Anticipated Impact of the Proposed Project:

There is an apparent lack of, and need for, research on processing 360 video images in general. Such research could open up new opportunities and introduce new workflows into the AEC sector by facilitating immersive interactions with targeted objects and information in real time, especially during remote collaboration between project partners.

The proposed project will contribute to an ongoing research field that explores the disruptive potential of integrating real-time data into eXtended reality (XR) applications, including AR/VR/MR, with the power of image processing and pattern recognition, in the AECO (Architecture, Engineering, Construction and Operations) sector. In our research, we develop and introduce the IDC (Immersive Dynamic Collaboration) framework, which brings together the two key ingredients of “real-time image processing” and “eXtended reality” (dynamic and immersive) to create shared, interactive and collaborative workflows.

The proposed project will present exploratory implementations of the IDC framework in key stages of a building project life-cycle and provide a critical evaluation of its future potential to boost efficiency, productivity, collaboration and innovation in the AECO sector. The exploratory implementations will be presented as three use case scenarios that showcase the integration of the IDC framework into different workflows during the Design, Construction and Operations of buildings, as summarised below:

Scenario 1 (Design): Remotely located project partners working on different sections of the project aim to superimpose their information input in real time on the same model (e.g. a physical model) at a remote location. The content of the different inputs (e.g. simulations, real-time data, digital models) can be changed dynamically by project participants in remote locations and iterated on, so that alternatives can be compared and discussed to support accurate decision making during the design development phase.

Scenario 2 (Construction): This scenario applies to the workflow at a construction site, among project partners in remote locations. One partner is on the construction site, and the other(s) could be anywhere in the world. The on-site person intends to share information with the project collaborators in real time (e.g. the scanned view of the construction site, with markups generated from 360 image processing). Depending on the kind of information or problem being dealt with, some of this information may be mapped directly onto the on-site person’s view, at the exact location it refers to.

Scenario 3 (Operations): Currently, our building operations (post-occupancy) and systems are not intelligently connected. Changes in building use (e.g. increased occupant movement) or external factors (e.g. external temperature) cannot trigger actions, and it is usually quite late by the time a problem is spotted. However, important changes tracked in real time (through sensors or through image processing and analysis) can be used to prompt action by facility managers, by linking IoT data with a virtual building model (for example, an abstract 3D model of the building). Real-time changes that require “Action” by the facility manager would prompt certain changes (e.g. colours) on the model (e.g. 3D or 2D) in real time, requesting attention or action. An MR (Mixed Reality) device (e.g. HoloLens) worn by a team member on site can display those areas of attention on the model, mapped onto the real world, in real time.
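The link between sensor data and model colours described in Scenario 3 can be sketched in a few lines. This is only an illustration under stated assumptions, not the IDC implementation: the `Zone` class, the function names, the two-threshold rule and the colour names are all hypothetical, and a real deployment would receive readings from an IoT platform rather than from a dictionary.

```python
from dataclasses import dataclass


@dataclass
class Zone:
    """One region of the virtual building model (hypothetical structure)."""
    name: str
    colour: str = "green"


def colour_for_reading(value, warn_at, act_at):
    """Translate a sensor value into a display colour using two thresholds."""
    if value >= act_at:
        return "red"      # action required by the facility manager
    if value >= warn_at:
        return "amber"    # worth attention, no action yet
    return "green"        # normal


def apply_readings(zones, readings, warn_at, act_at):
    """Recolour zones from the latest readings; return zone ids needing action."""
    needs_action = []
    for zone_id, value in readings.items():
        zone = zones[zone_id]
        zone.colour = colour_for_reading(value, warn_at, act_at)
        if zone.colour == "red":
            needs_action.append(zone_id)
    return needs_action


# Illustrative temperatures in degrees Celsius; thresholds are made up.
zones = {"lobby": Zone("Lobby"), "plant": Zone("Plant room")}
flagged = apply_readings(zones, {"lobby": 24.0, "plant": 31.5},
                         warn_at=26.0, act_at=30.0)
```

In this sketch, the zones returned by `apply_readings` are the ones an MR device would highlight for the team member on site; keeping the colour rule in one small function makes it easy to swap the thresholds for a different rule per sensor type.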

The aforementioned use case scenarios will be further developed and evaluated according to the value the IDC framework brings to each (e.g. efficiency, productivity, accuracy, creativity).

Project Team (Deakin):

Tuba Kocaturk

Rui Wang

Phillip Roos